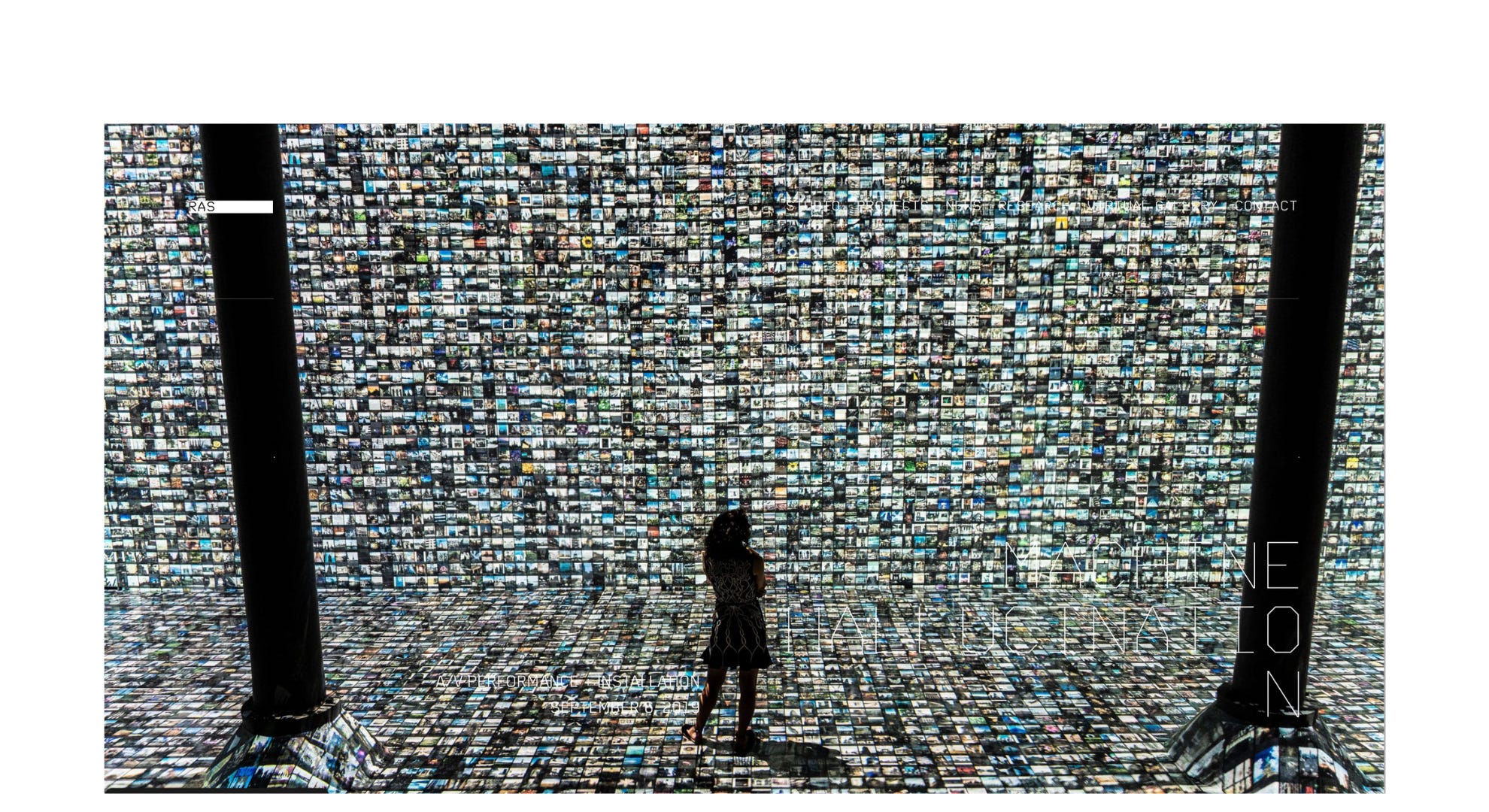

Yeah, AIs “hallucinate.” So do you!

Not that there's anything wrong with that. I do too. We all do. We're assessment-making beings—constantly, inescapably constructing interpretations of events, people, motives, ourselves, etc.

We can do no other. (As Peter Yaholkovsky puts it, "I rarely make assessments; they're just already there!") We encounter the wor…

Subscribe to Gil Friend on STRATEGIC sustainability to keep reading this post and get 7 days of free access to the full post archives.